DevOps/Cloud engineering at Vayana

Vayana Network has always been a strong believer in automation and has been practicing "infrastructure as code" for quite some time. It took us a while to really understand the DevOps philosophy and come up with a set of principles that lay the foundation for our team to build automation solutions as part of our DevOps practices.

Here is an attempt to portray our DevOps philosophy, principles and practices. It will be followed by multiple articles covering the technical details of the DevOps and cloud solutions we have implemented here, and the principles below are at the core of all of them.

About us:

As a small DevOps team, we have made small strides in bringing in the culture and the automation needed to serve the ultimate goal of any startup, including ours:

Deliver value and adapt faster to market needs, at scale

Our DevOps motto

If I had to put our DevOps motto into a single paragraph, it would be this:

Our goal is to remove the roadblocks across the whole development and operations lifecycle, from idea and development to deployment and observability, with the help of tools, methodologies and shared responsibility.

In a nutshell:

DevOps is whatever you do to bridge friction created by silos, and all the rest is engineering. - Patrick Debois, Snyk

Principles:

1. Simplicity:

This is an unusual principle to be at the top, but that's what we keep in mind when developing solutions here at Vayana.

In fact, this is what you will see outside one of our meeting rooms at our Pune office.

Complexity is your enemy. Any fool can make something complicated. It is hard to keep things simple. - Richard Branson

Given the multitude of tools and services at our disposal, there is always more than one way to get things done in DevOps. Each approach has its own merits and tradeoffs. We focus on reaching a solution while keeping the following in mind:

It's simple enough that:

- everyone in the team can contribute

- everyone in the team is aware of the why of the solution

- everyone in the team can troubleshoot/debug

- it can be automated in the long run

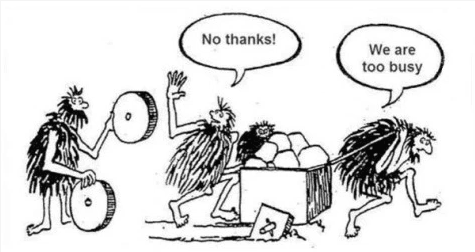

Tip: not everything has to be automated. If you automate an inefficient process, you now have an automated inefficient process :D

Which brings me to the fact that it's also important to be pragmatic. There is a fine line between simple (follows the above principles) and too simple (can't scale out).

Finding the right balance between the two is difficult, but not impossible.

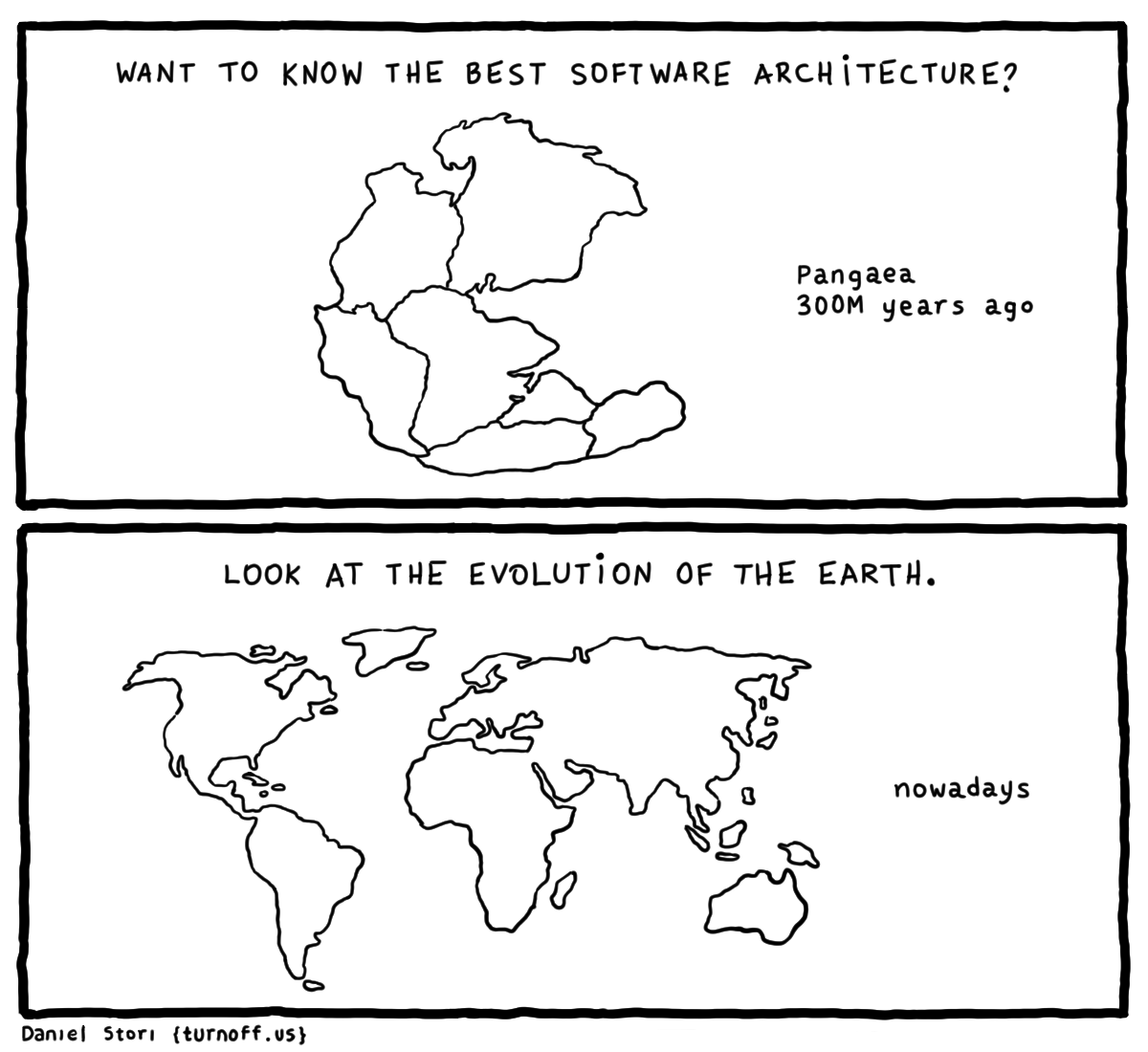

2. Micro, reliable & scalable

When it comes to designing infrastructure in the AWS cloud, we spend time on the following factors:

Microservices over monoliths

- Each service implements a single business capability within its bounded context.

- Services are loosely coupled, communicating through APIs.

A microservice architecture is more complex to build and manage. It takes a lot of planning and requires a mature development and DevOps culture.

But done right, it pays off with a much more resilient architecture and faster innovation.

Design for failure over Design to avoid failures

- We design the infra to be highly available, rather than worrying about an outage in a single AZ.

- This is achieved by designing the network to span multiple availability zones and enabling elastic scaling based on the appropriate monitoring metrics. More on this under the Observability principle.

- It's always better to go back to the drawing board and plan the architecture in a redundant way than to refactor it later because of avoidable downtime.
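The multi-AZ point above can be checked mechanically. Here is a minimal sketch, not our actual tooling: it assumes a list of subnet records shaped like the `Subnets` list in an EC2 `DescribeSubnets` response, and the function names, subnet IDs and zone names are made up for illustration.

```python
from collections import Counter

def az_spread(subnets):
    """Count how many subnets sit in each availability zone."""
    return Counter(subnet["AvailabilityZone"] for subnet in subnets)

def spans_multiple_azs(subnets, minimum=2):
    """Return True if the subnets cover at least `minimum` distinct AZs."""
    return len(az_spread(subnets)) >= minimum

# Hypothetical sample data; the IDs and zones are invented for this example.
SAMPLE_SUBNETS = [
    {"SubnetId": "subnet-aaa", "AvailabilityZone": "ap-south-1a"},
    {"SubnetId": "subnet-bbb", "AvailabilityZone": "ap-south-1b"},
    {"SubnetId": "subnet-ccc", "AvailabilityZone": "ap-south-1a"},
]

if __name__ == "__main__":
    print(dict(az_spread(SAMPLE_SUBNETS)))
    print(spans_multiple_azs(SAMPLE_SUBNETS))
```

A check like this could run in a pipeline before deployment, so that a network confined to a single AZ is caught at review time rather than during an outage.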

3. Managed Services and Serverless

We like managed services a lot. In our view AWS is the best cloud provider out there, and the managed services it offers are some of the best.

With 200+ services in AWS, there is often more than one service that can help you achieve a use case. The catch is to know exactly the why of the solution you need to build; that will help you find which service suits your purpose better.

As Andy Jassy, AWS CEO, rightly says: "We don't believe in one tool to rule the world. We want you to use the right tool for the right job."

For a small team like ours, delegating the overhead of managing servers, keeping them patched, configuring them, monitoring them round-the-clock to the cloud service provider is of utmost importance. Hence we try to use as many serverless offerings as possible.

This allows us to focus more on the development of the tools and applications than on the maintenance of the backend they run on.

Some of the serverless AWS services we use are:

- Fargate (to run our ECS containers)

- Lambda (functions as a service, triggered by events)

- API Gateway

- SQS (message queues)

- DynamoDB
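To make the "triggered by events" part concrete, here is a minimal sketch of a Lambda handler fed by an SQS queue. It is an illustration, not our production code: the `process` function and the `invoice_id` field are hypothetical. The `batchItemFailures` return value only has an effect when partial batch responses (`ReportBatchItemFailures`) are enabled on the event source mapping.

```python
import json

def process(message):
    """Hypothetical business logic: reject messages without an 'invoice_id'."""
    if "invoice_id" not in message:
        raise ValueError("missing invoice_id")
    return message["invoice_id"]

def handler(event, context):
    """Process each SQS record and report only the failed ones back to SQS."""
    failures = []
    for record in event.get("Records", []):
        try:
            # The SQS message body arrives as a string; we assume it holds JSON.
            process(json.loads(record["body"]))
        except ValueError:
            # Covers both invalid JSON and our own validation error.
            failures.append({"itemIdentifier": record["messageId"]})
    return {"batchItemFailures": failures}
```

Reporting failures per message means one bad message does not force the whole batch to be retried.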

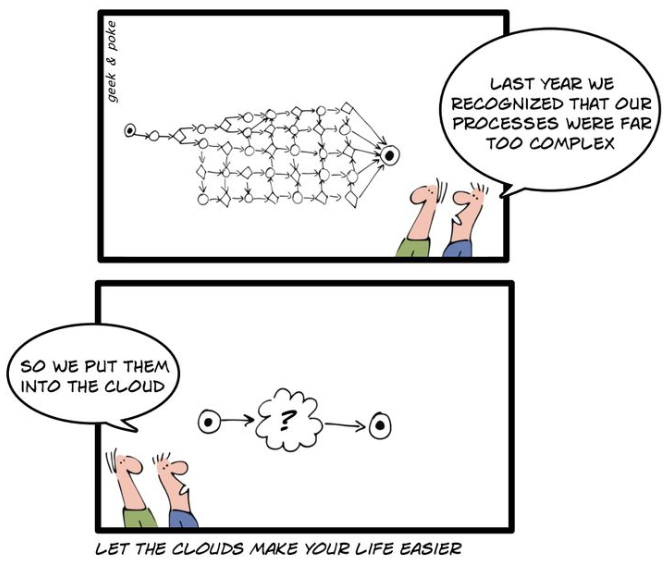

On a lighter note, this is how Cloud works :D

4. Declarative infrastructure and automate the rest:

Infra as code - Immutable infrastructure

- We manage all our infrastructure using Terraform. That is, every AWS resource in the infrastructure has a counterpart in a Terraform state file, and any change to those components is made through Terraform only.

- The new drift detection feature of Terraform also helps us find out whether the actual configuration of a resource has deviated from what is declared in the code.

- Terraform also helps us quickly move our infra to other environments as per business need.
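One way to check for drift from a script is to run `terraform plan -detailed-exitcode` and read the exit code: 0 means no changes, 1 means an error, and 2 means the plan contains changes. The sketch below is an assumption about how such a check could look, not a description of our exact setup; the function names and labels are illustrative, and it expects the `terraform` binary and an initialised working directory.

```python
import subprocess

# Exit codes documented for `terraform plan -detailed-exitcode`.
PLAN_EXIT_MEANING = {
    0: "in-sync",
    1: "error",
    2: "changes-detected",
}

def interpret_plan_exit_code(code):
    """Map a `terraform plan -detailed-exitcode` exit code to a label."""
    return PLAN_EXIT_MEANING.get(code, "unknown")

def check_drift(workdir):
    """Run a plan in `workdir` and return a label describing the result."""
    result = subprocess.run(
        ["terraform", "plan", "-detailed-exitcode", "-input=false", "-no-color"],
        cwd=workdir,
        capture_output=True,
        text=True,
    )
    return interpret_plan_exit_code(result.returncode)
```

Note that exit code 2 covers any difference between code and real infrastructure, including code changes that have not been applied yet, so a scheduled check like this is most meaningful on a branch that is already fully applied.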

Automate

- Once a task reaches the stage where it needs to be executed repeatedly, and in the same way every time, that's the point where you bring in the automation guns.

- We use small Bash and Python scripts extensively, run either manually or on a schedule, to perform simple tasks. For example, adding a user's IP to all the relevant security groups (firewalls) in AWS. This saves the user from having to add their IP to each security group manually.

- We also use Ansible widely to configure our servers and laptops for hardening and optimization.

- AWS Lambda is also a major service in our automation arsenal. There are many Lambdas which perform small tasks on a schedule. Those tasks range from triggering S3 backups from CloudWatch to compiling the day's observability stats from AWS and posting them on Slack for the relevant stakeholders.
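As an illustration of the security group example above, here is a minimal sketch of what such a script could look like. It is not our actual script: the function names, the default port and the rule description are assumptions. The client is passed in as a parameter and is expected to be a boto3 EC2 client, whose `authorize_security_group_ingress` call takes `GroupId` and `IpPermissions`.

```python
import ipaddress

def build_ingress_permission(ip, port=22, description="added by script"):
    """Build an IpPermissions entry allowing a single IPv4 address on one TCP port."""
    # IPv4Address raises a ValueError subclass for anything that is not valid IPv4.
    cidr = f"{ipaddress.IPv4Address(ip)}/32"
    return {
        "IpProtocol": "tcp",
        "FromPort": port,
        "ToPort": port,
        "IpRanges": [{"CidrIp": cidr, "Description": description}],
    }

def allow_ip(ec2_client, group_ids, ip, port=22):
    """Add the same ingress rule to every security group in `group_ids`."""
    permission = build_ingress_permission(ip, port)
    for group_id in group_ids:
        ec2_client.authorize_security_group_ingress(
            GroupId=group_id,
            IpPermissions=[permission],
        )
    return permission
```

With boto3 installed and credentials configured, this could be called as `allow_ip(boto3.client("ec2"), ["sg-..."], "203.0.113.10")`. A real script would also want to handle the error AWS returns when the rule already exists.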

Gotcha: There's this thin line between what needs to be automated and is a value add versus what is automated for the sake of automating it. The latter is just a waste of time.

5. Observable

There is no defense to any fort without its watchtowers.

Any system needs a set of predefined metrics that teams can watch to monitor its state and performance, as well as a set of tools to debug and audit it when investigating outages or irregularities.

To do a good job with monitoring and observability, your teams should have the following:

- Reporting on the overall health of systems (Are my systems functioning? Do my systems have sufficient resources available?).

- Reporting on system state as experienced by customers (Do my customers know if my system is down and have a bad experience?).

- Monitoring for key business and systems metrics.

- Tooling to help you understand and debug your systems in production.

- Tooling to find information about things you did not previously know (that is, you can identify unknown unknowns). Access to tools and data that help trace, understand, and diagnose infrastructure problems in your production environment, including interactions between services.

Monitoring systems should not be confined to a single individual or team within an organization. Empowering all developers to be proficient with monitoring helps develop a culture of data-driven decision making and improves overall system debuggability, reducing outages.

Proper alerts have to be set up for the respective stakeholders. Each alert should carry the relevant information from the pointers below:

- What happened?

- When did it happen?

- Who initiated it?

- On what did it happen?

- From where was it initiated?
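The five questions above map naturally onto a small alert structure. This is a minimal sketch; the class name, field names and sample values are hypothetical and not taken from our alerting stack.

```python
from dataclasses import dataclass
from datetime import datetime, timezone

@dataclass(frozen=True)
class Alert:
    what: str       # What happened?
    when: datetime  # When did it happen?
    who: str        # Who initiated it?
    resource: str   # On what did it happen?
    source: str     # From where was it initiated?

    def render(self):
        """Format the alert as plain text, one question per line."""
        return "\n".join([
            f"What: {self.what}",
            f"When: {self.when.isoformat()}",
            f"Who: {self.who}",
            f"On what: {self.resource}",
            f"From where: {self.source}",
        ])

if __name__ == "__main__":
    alert = Alert(
        what="Security group rule added",
        when=datetime.now(timezone.utc),
        who="example-user",
        resource="sg-example",
        source="203.0.113.10",
    )
    print(alert.render())
```

Making every field mandatory means an alert that cannot answer one of the questions fails at construction time instead of reaching a stakeholder half empty.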

Monitoring also has to be in a continuous improvement mode. We have to record our learnings from outages and mistakes. The process of writing retrospectives or postmortems with corrective actions is well documented. One outcome of this process is the development of improved monitoring.

6. Culture of Shared responsibility and being open to new technologies

A culture in any organization stands on three pillars: people, process and products.

Processes and products are important, but people are always more important.

- We have a culture where people can have a sense of shared responsibility

The main goal of DevOps is to break down silos. Here at Vayana, the culture is such that everyone has a sense of ownership of the final quality of the product as well as its stability.

It's not ideal for a dev to see the outcome of their work only at deployment, when everything blows up, leading to firefighting and burnout.

The developers at Vayana are well aware of the entire lifecycle of the deployment as well as the cloud architecture.

The cloud team, in turn, tries to understand the pain points and features of the applications in the pipeline, and helps both in finding the best solution using the available cloud services and in optimizing the application's performance in the cloud environment.

Working in small batches, ideally single-piece flow, helps us get fast and continual feedback on our work.

- We have a culture where people can share ideas, processes and tools and explore new technologies.

When technical debt is treated as a priority and paid down and architecture is continuously improved and modernized, teams can work with flow, delivering better value sooner, safer, and happier.

We keep an eye on the latest updates to the tools we use, e.g. Terraform, the AWS CLI and the AWS SDKs. We also follow the AWS technical blog and other DevOps/AWS forums to see where the industry is heading.

What do we use:

Here are the tools/services we use frequently in our day-to-day activities:

- Bash and Python for small automations

- AWS Lambda for serverless functions

- AWS ECS + AWS Fargate for serverless container orchestration

- Terraform as our infrastructure-as-code tool

- Ansible for configuration management, hardening

To end my thoughts: Rome was not built in a day, and DevOps is a continuous process. We will keep nurturing the above principles as the scale of Vayana Network's infrastructure grows exponentially, just like our business ;)